Technical SEO is one of the most important areas of Search Engine Optimization.

The main goal of SEO is to attract high-quality organic traffic from search engines. Without solid technical SEO, it is very hard to earn a place in the search engine results pages (SERPs).

Advanced technical SEO matters more than ever. Here is a checklist of the technical items that matter most for ranking a website.

Advanced Technical SEO Checklist

1. SEO-Friendly Web Hosting

Much of a website's performance relies on its web hosting. To avoid server problems, page timeouts, slow page loads, and high bounce rates, keep these points in mind before purchasing web hosting.

- Always choose a web hosting company with at least a 99.9% uptime guarantee.

- Choose web hosting that includes an SSL certificate and creates automatic backups.

- Choose a host with a responsive support team that offers 24/7 support.

- Check customer reviews before purchasing web hosting.
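An uptime guarantee is easier to compare when you convert it into the downtime it still allows. The short Python sketch below does that arithmetic; it assumes a 365-day year and a 30-day month, and `max_downtime_hours` is a hypothetical helper written for this example, not part of any hosting tool. By this arithmetic, 99.9% uptime still allows about 8.76 hours of downtime per year, or roughly 43 minutes per month.

```python
# Convert an uptime guarantee (in percent) into the downtime it still allows.
HOURS_PER_YEAR = 365 * 24   # 8,760 hours (non-leap year, an assumption)
HOURS_PER_MONTH = 30 * 24   # 720 hours (30-day month, an assumption)


def max_downtime_hours(uptime_percent: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Return the maximum downtime, in hours, permitted by an uptime guarantee."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return period_hours * (100 - uptime_percent) / 100


for uptime in (99.0, 99.9, 99.99):
    per_year = max_downtime_hours(uptime)
    per_month_min = max_downtime_hours(uptime, HOURS_PER_MONTH) * 60
    print(f"{uptime}% uptime: up to {per_year:.2f} h/year, {per_month_min:.1f} min/month")
```

Each extra "9" cuts the allowed downtime by a factor of ten, which is why the difference between 99% and 99.9% matters more than it looks.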

2. SSL Certificate

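SSL stands for Secure Sockets Layer. SSL is a technology that creates an encrypted link between a browser and a web server. Modern websites actually use TLS (Transport Layer Security), the successor to SSL, but the certificates are still commonly called SSL certificates.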

Without an SSL certificate, most browsers warn users that the website is not secure.

With an SSL certificate, the website is served over HTTPS (Hypertext Transfer Protocol Secure), so its URL begins with https://.

3. Website Speed Performance

Website speed is a direct ranking factor, and search engines prefer fast-loading websites. As a rule of thumb, a website that loads in 3 seconds or less is considered fast.

You can test your website's speed with GTmetrix and Google PageSpeed Insights, both of which are free to use.

Here are some ways to improve website speed:

- Use good web hosting

- Upload web-optimized images

- Combine JavaScript code into one file

- Combine CSS code into one file

- Enable caching

- Enable compression (for example, GZIP)

- Minify the CSS and JavaScript code

- Use CDNs (Content Delivery Networks)
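To see why compression helps, here is a minimal Python sketch that runs the standard-library `gzip` module on a made-up, repetitive stylesheet. It only illustrates the size reduction; in practice compression is switched on in your web server or CDN settings rather than in application code, and the actual savings depend on the file.

```python
import gzip

# Made-up, repetitive stylesheet; real CSS, JavaScript, and HTML are also repetitive text.
css = ".button { color: #ffffff; background: #0066cc; padding: 8px 16px; }\n" * 200
raw = css.encode("utf-8")

# Compress at level 6, zlib's default level.
compressed = gzip.compress(raw, compresslevel=6)

print(f"original: {len(raw):,} bytes")
print(f"gzipped:  {len(compressed):,} bytes")
print(f"saved:    {100 * (1 - len(compressed) / len(raw)):.1f}%")

# Compression is lossless: the browser gets back exactly the original bytes
# when the server sends them with the header "Content-Encoding: gzip".
assert gzip.decompress(compressed) == raw
```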

4. Meet Core Web Vitals (Page Experience)

Google's Core Web Vitals are a set of metrics that help developers understand how users experience a web page.

The following three metrics make up the Core Web Vitals:

1. Cumulative Layout Shift (CLS):

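CLS measures the visual stability of a web page: how much visible elements unexpectedly shift while the page loads. CLS is a unitless score, not a time.

- Good CLS is less than or equal to 0.1

- Fair CLS is less than or equal to 0.25

- Poor CLS is greater than 0.25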

2. Largest Contentful Paint (LCP):

LCP measures how long it takes for the largest content element visible on screen (such as a hero image or a large block of text) to render.

- Good LCP is less than or equal to 2.5 seconds

- Fair LCP is less than or equal to 4 seconds

- Poor LCP is greater than 4 seconds

3. First Input Delay (FID):

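FID measures the delay between a user's first interaction with a page (such as a click or a tap) and the moment the browser can start responding to it.

Because FID needs a real user interaction, lab tools such as Lighthouse report Total Blocking Time (TBT) as a proxy for it.

- Good FID is less than or equal to 100 milliseconds

- Fair FID is less than or equal to 300 milliseconds

- Poor FID is greater than 300 milliseconds

In March 2024, Google replaced FID with Interaction to Next Paint (INP) as a Core Web Vital.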
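These thresholds can be expressed as a small lookup table. The Python sketch below assumes Google's published boundaries (CLS: 0.1 and 0.25, unitless; LCP: 2.5 s and 4 s; FID: 100 ms and 300 ms), uses "fair" for the middle band that Google calls "needs improvement", and rates made-up sample measurements. `Thresholds` and `rate` are hypothetical names for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    good: float  # values <= good are rated "good"
    fair: float  # values <= fair (but > good) are rated "fair"; above that, "poor"


# Google's published boundaries. Units: CLS is a unitless score,
# LCP is in seconds, FID is in milliseconds.
CORE_WEB_VITALS = {
    "CLS": Thresholds(good=0.1, fair=0.25),
    "LCP": Thresholds(good=2.5, fair=4.0),
    "FID": Thresholds(good=100, fair=300),
}


def rate(metric: str, value: float) -> str:
    """Rate one measurement as "good", "fair", or "poor"."""
    limits = CORE_WEB_VITALS[metric]
    if value <= limits.good:
        return "good"
    if value <= limits.fair:
        return "fair"
    return "poor"


# Made-up measurements for a single page.
page = {"CLS": 0.05, "LCP": 3.1, "FID": 320}
for metric, value in page.items():
    print(f"{metric}: {value} -> {rate(metric, value)}")
```

For this sample page, CLS rates "good", LCP "fair", and FID "poor", so improving interactivity would be the first priority.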

5. Resolve Duplicate Content Issues

There are two types of duplicate content, and both can cause problems:

On-site duplicate content means the same content appears on different URLs of the same website.

Off-site duplicate content means two or more different websites publish the same content.

Duplicate content may lower your rankings. You can handle on-site duplicate content with the canonical tag, which tells search engines which URL is the main (preferred) version of the content.
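As an illustration of what the canonical tag does, the Python sketch below maps several URL variants of the same page to one preferred URL and prints the matching `<link rel="canonical">` tag. The normalization policy (HTTPS, the `www.` host, no trailing slash, no query string) and the `www.site.com` URLs are assumptions for this example, and `canonical_url` and `canonical_tag` are hypothetical helpers. A real site must keep any query parameters that actually change the page content.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url: str, use_www: bool = True) -> str:
    """Map a URL variant to one preferred form (hypothetical policy, see comments)."""
    parts = urlsplit(url)
    host = parts.hostname or ""           # lowercased; any port is dropped
    if host.startswith("www."):
        host = host[len("www."):]
    if use_www:
        host = "www." + host
    path = parts.path.rstrip("/") or "/"  # drop trailing slash, keep the root "/"
    # Always HTTPS; the query string and fragment are discarded.
    return urlunsplit(("https", host, path, "", ""))


def canonical_tag(url: str) -> str:
    """Return the <link rel="canonical"> element for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


variants = [
    "http://www.site.com/blog/technical-seo/",
    "https://site.com/blog/technical-seo?utm_source=newsletter",
    "https://WWW.site.com/blog/technical-seo",
]
for variant in variants:
    print(canonical_tag(variant))
```

All three variants print the same tag, which is the point: every duplicate URL tells search engines which single version to rank.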

If someone has stolen your content, contact the site owner with a link to your original content and its publishing date, and ask them to remove the copy. If they do not, you can report the site.

6. Mobile-Friendly Website

According to Statista, about 3.8 billion people use smartphones. When someone searches from a mobile phone, search engines favor mobile-friendly websites in the results.

So make sure your website is responsive: it should adapt to any device and screen size.

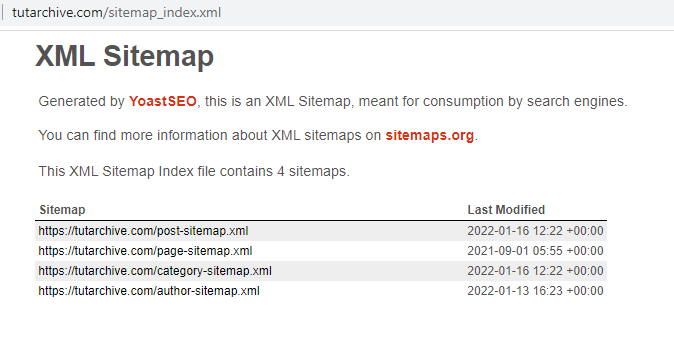
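A responsive page normally starts with a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`. The Python sketch below, built on the standard-library `html.parser`, only checks whether that tag is present; it is a rough first check under that assumption, not a substitute for testing the layout on real devices. `ViewportFinder` and `has_responsive_viewport` are hypothetical names.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of <meta name="viewport"> if the page declares one."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        # Tag and attribute names arrive lowercased; attribute values may be None.
        if tag == "meta":
            attributes = dict(attrs)
            if (attributes.get("name") or "").lower() == "viewport":
                self.viewport = attributes.get("content")


def has_responsive_viewport(html: str) -> bool:
    """True if the page has a viewport tag that sets width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "")


page = '<html><head><meta name="viewport" content="width=device-width, initial-scale=1"></head></html>'
print(has_responsive_viewport(page))                          # True
print(has_responsive_viewport("<html><head></head></html>"))  # False
```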

7. XML Sitemap

sitemap.xml is a file that lists the URLs of your website. It works like a roadmap that shows search engines how to find all of your content.

A sitemap helps search engines discover and crawl your content as quickly as possible. Search engines can still crawl a website without a sitemap, but a sitemap makes it easier.

Some CMSs generate a sitemap.xml file automatically. Otherwise, you can create one with a sitemap generator.

You can usually view your website's sitemap at an address such as www.site.com/sitemap.xml. Once the sitemap is created, submit its URL in Google Search Console.
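If your CMS does not generate a sitemap, a basic one is easy to build. This Python sketch uses the standard-library `xml.etree.ElementTree` to produce the `urlset`/`url`/`loc`/`lastmod` structure defined by the sitemaps.org protocol; the page URLs and dates are placeholders, and `build_sitemap` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> bytes:
    """Build sitemap XML from (loc, lastmod) pairs; lastmod uses YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # absolute URL of the page
        ET.SubElement(url, "lastmod").text = lastmod  # date of last modification
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Placeholder pages and dates for illustration.
pages = [
    ("https://www.site.com/", "2024-01-15"),
    ("https://www.site.com/blog/technical-seo", "2024-02-01"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml.decode("utf-8"))
# In practice you would save these bytes as sitemap.xml in the site's root directory.
```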

8. Robots.txt File

Robots.txt is a file that tells search engine bots (also known as spiders or crawlers) which pages of your website they are allowed to crawl. Note that it controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it.

The robots.txt file is important for a website. You can use it to stop search engine bots from crawling duplicate content or thin pages.

Mistakes in the robots.txt file can stop pages from being crawled or cause indexing problems. So check your robots.txt file monthly with the robots.txt report in Google Search Console, and learn how to create a robots.txt file correctly.
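You can also sanity-check rules locally. The Python sketch below feeds a hypothetical robots.txt (blocking internal search results and tag archives, which are typical thin or duplicate pages) into the standard-library `urllib.robotparser` and asks whether Googlebot may crawl a few URLs. Python's parser uses simple prefix matching and does not implement every Google extension, such as `*` and `$` wildcards inside paths, so treat it as a rough check rather than a replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of internal search results and tag
# archives, and point them at the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /tag/

Sitemap: https://www.site.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ("/blog/technical-seo", "/search?q=seo", "/tag/seo/"):
    allowed = parser.can_fetch("Googlebot", "https://www.site.com" + path)
    print(f"{path:25} {'crawlable' if allowed else 'blocked'}")

print(parser.site_maps())  # sitemap URLs declared in the file
```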
